Data and AI Training


How to access and use a GenAI model

There are many ways to access a model.

Website or App

The most common way to use a model is through the vendor's website or app.

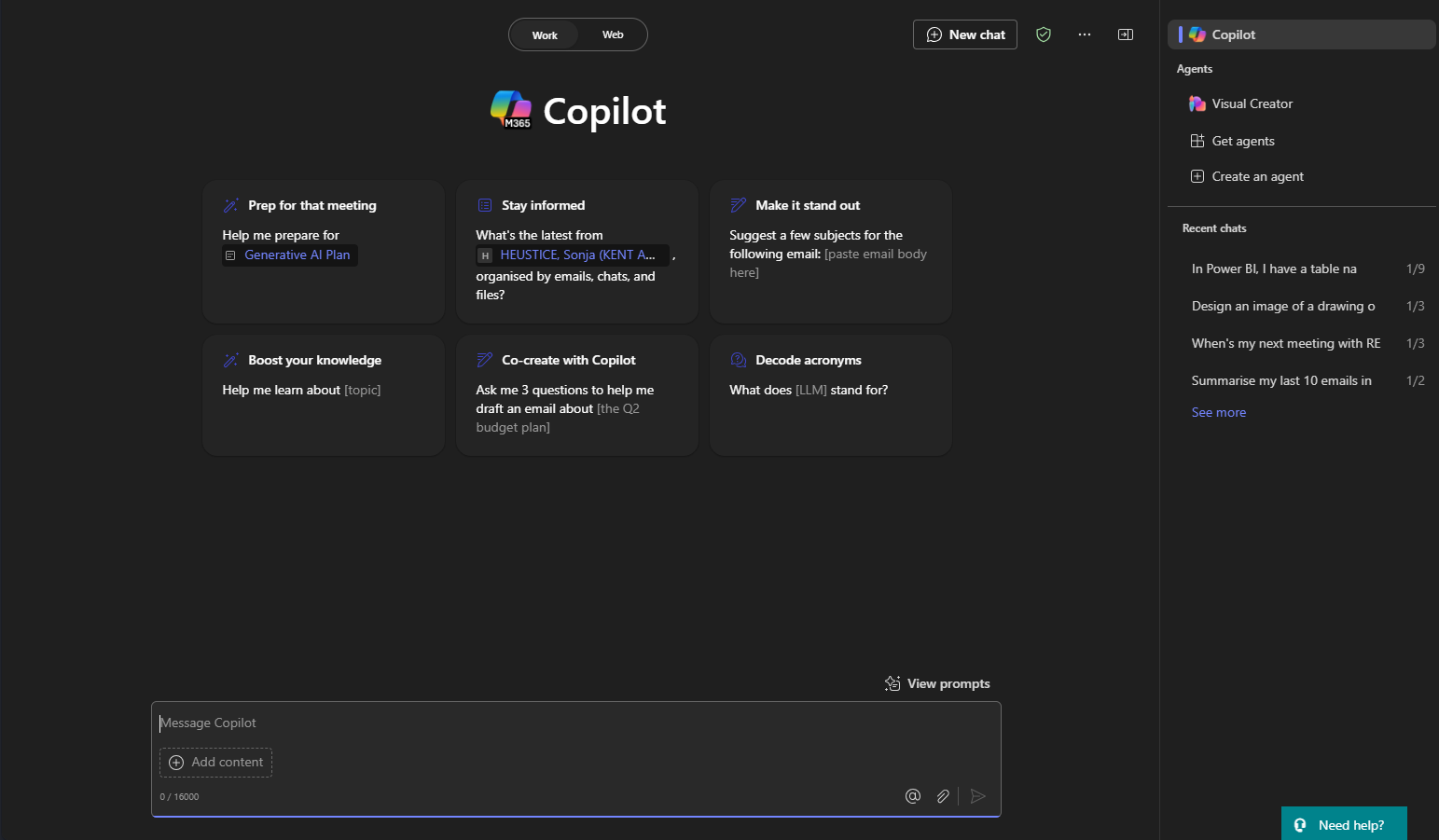

For example, here is the Copilot front page as of February 2025.

API

A model can also be called through the vendor's API, usually from a Python script.

Here are the links to the API documentation for OpenAI and Google Gemini.

For example, what do you think this Python snippet does?

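    from openai import OpenAI

    # Assumes the openai package is installed and OPENAI_API_KEY is set in the environment.
    client = OpenAI()

    completion = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": "You are a poet and an expert in Python."},
            {"role": "user", "content": "Compose a poem that explains lists, dicts and tuples."},
        ],
    )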
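To make the moving parts of an API call more visible, here is a minimal sketch that sends the same kind of request using only the Python standard library. The helper names (`build_payload`, `extract_reply`, `ask`) are hypothetical and chosen for illustration, and the sketch assumes an API key is stored in the `OPENAI_API_KEY` environment variable. It is one possible way to do this, not the vendor's recommended client.

```python
import json
import os
import urllib.request

# Public REST endpoint for OpenAI chat completions.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_payload(system_prompt, user_prompt, model="gpt-3.5-turbo"):
    """Return the JSON body for a chat request (hypothetical helper)."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }


def extract_reply(response_body):
    """Pull the assistant's text out of a decoded chat completion response."""
    return response_body["choices"][0]["message"]["content"]


def ask(system_prompt, user_prompt):
    """Send the request; assumes OPENAI_API_KEY is set in the environment."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(build_payload(system_prompt, user_prompt)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return extract_reply(json.load(response))
```

The system message sets the model's persona, the user message carries the actual request, and the reply text sits inside the first entry of `choices` in the response.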

Through a developer platform, AI studio or “playground”

The vendor may provide a web page where we can choose a model, set some parameters and run some tests, without needing to write any Python or other code.

Here are the links to the playgrounds for OpenAI and Google Gemini.

Through a third-party website

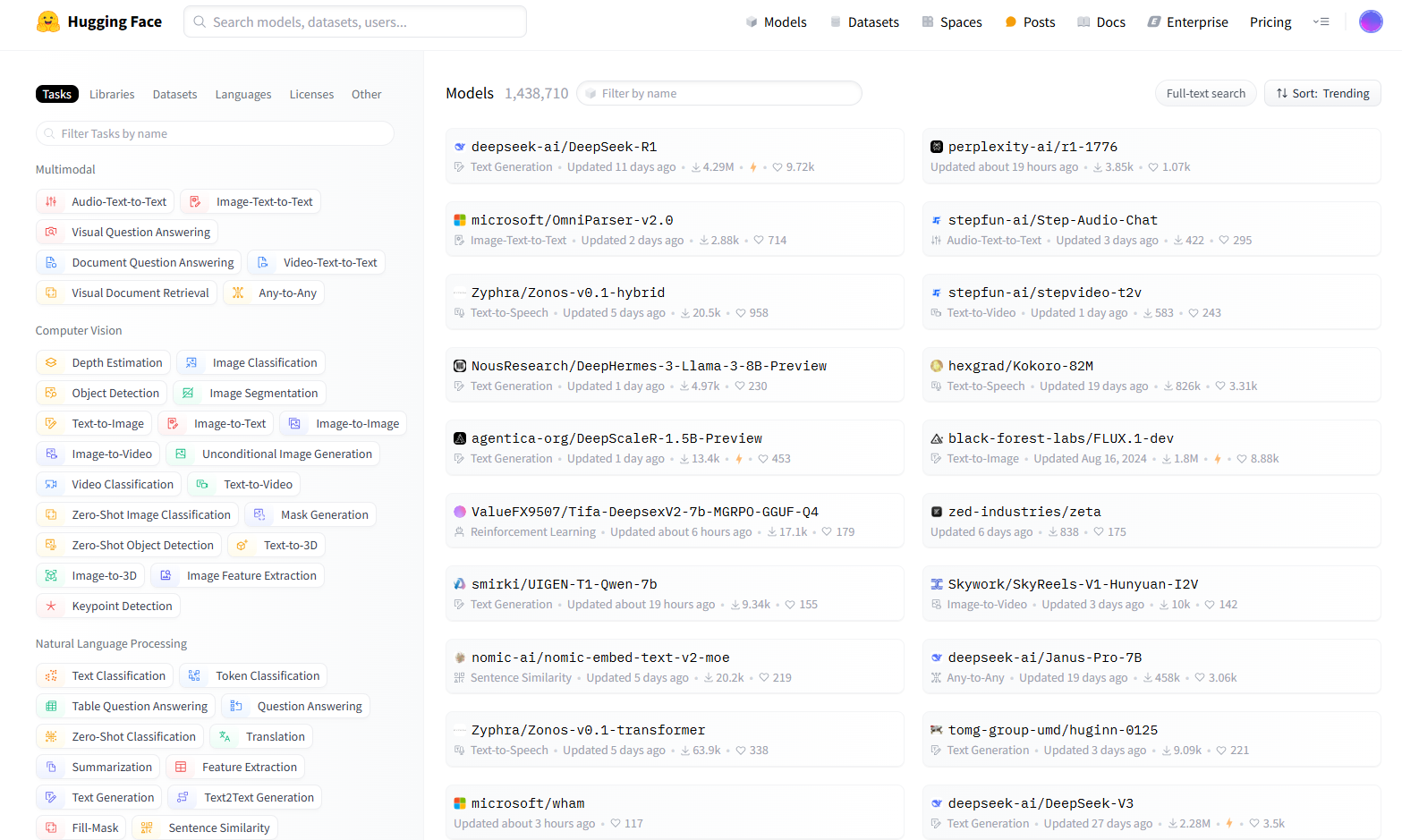

This is for open source models only.

The most famous of these is Hugging Face, which hosts a comprehensive and overwhelming list of models.
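One way to tame a long model list is to search it from a script rather than browse it. The sketch below builds a search URL for the Hugging Face Hub's public models endpoint and reads model identifiers from the result. The `search` and `limit` query parameters and the `id` field are assumptions based on the public Hub API, and the helper names are hypothetical, so check the current documentation before relying on them.

```python
import json
import urllib.request
from urllib.parse import urlencode

# Assumed public endpoint for listing models on the Hugging Face Hub.
HUB_MODELS_URL = "https://huggingface.co/api/models"


def build_search_url(search_term, limit=5):
    """Return a URL that asks the Hub for a few models matching a term."""
    query = urlencode({"search": search_term, "limit": limit})
    return f"{HUB_MODELS_URL}?{query}"


def model_ids(results):
    """Extract the model identifiers from a decoded list of results."""
    return [entry["id"] for entry in results]


def search_models(search_term, limit=5):
    """Fetch matching models; needs network access to huggingface.co."""
    with urllib.request.urlopen(build_search_url(search_term, limit)) as response:
        return model_ids(json.load(response))
```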

Local

This is for open source models only.

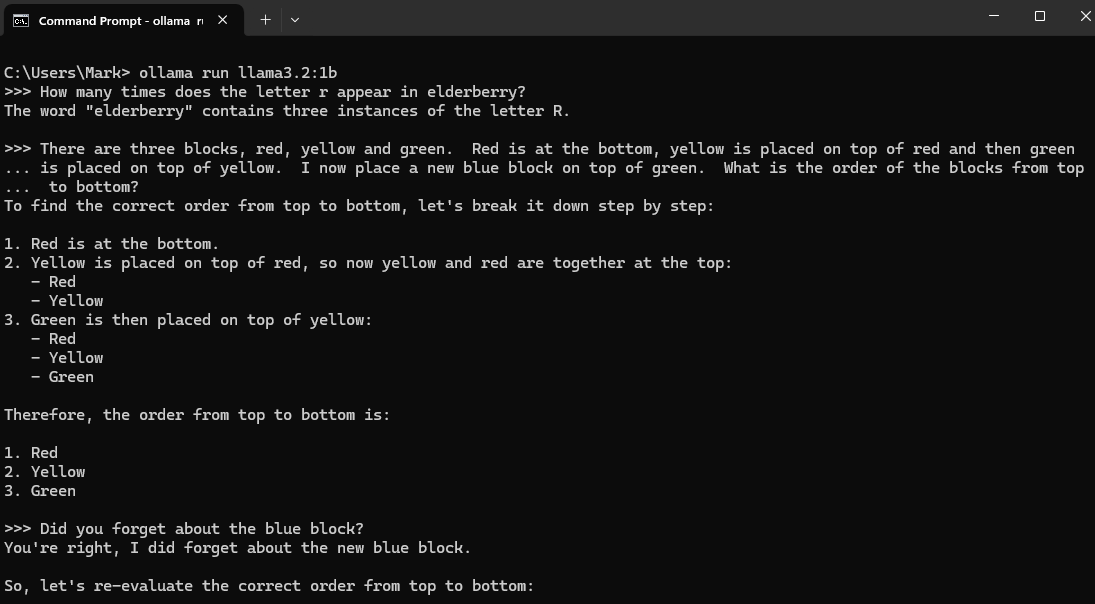

We can use another application, such as Ollama, to choose, download and run a model locally.

This has obvious advantages from a privacy perspective.

Consumer hardware (your typical PC or Mac) will struggle to run all but the smallest models.

The screenshot shows a small Llama model running in Ollama, which has a command-line interface.
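Besides the command line, a locally running Ollama instance can be called from a Python script over HTTP. The sketch below assumes Ollama is listening on its default local address and that a small model has already been downloaded. The model tag `llama3.2:1b` and the helper names are assumptions for illustration only.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (assumed unchanged).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3.2:1b"):
    """Return the JSON body for a single, non-streamed generation request."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(prompt, model="llama3.2:1b"):
    """Send the prompt to the local model and return its reply text."""
    request = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(build_request(prompt, model)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["response"]
```

Because the request never leaves the machine, the prompt and the reply stay local, which is where the privacy advantage comes from.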

Embedded in applications

The most common example of this is Microsoft, which has built its Copilot AI tools into its products, notably Teams, PowerPoint, Excel, Word and Outlook.

Another example is “pair programmer” AI tools, such as GitHub Copilot. These assist with code development from within the code editor.

Embedded AI tools are often very convenient and work well because they can understand the context and data in the application. However, external models will also work well if we describe the salient features of the context. For example, here is the data in an Excel workbook being explained to an external AI tool …

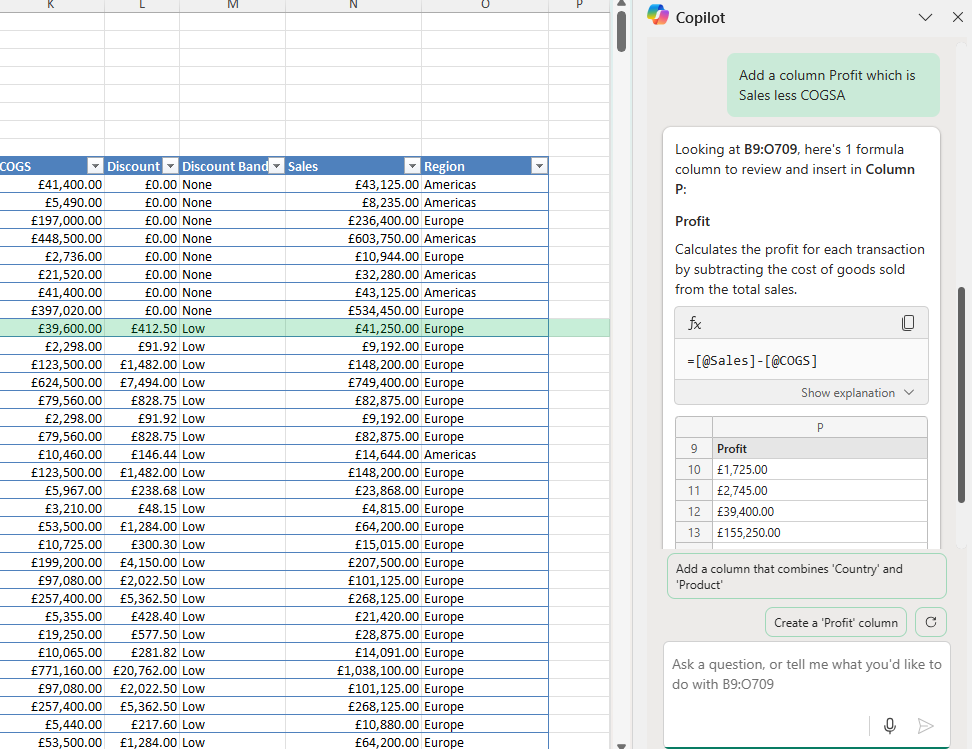

Here is Copilot working inside Excel.
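When an external tool is used instead, the description of the workbook can be assembled programmatically. The sketch below is one possible way to turn a sheet's name, column headers and a few sample rows into a prompt. The function name, the prompt wording and the sample data are all hypothetical.

```python
def describe_sheet(sheet_name, headers, rows, question, sample_size=3):
    """Build a prompt that gives an external AI tool the context it cannot see."""
    lines = [
        f"I have an Excel sheet named '{sheet_name}' with {len(rows)} data rows.",
        "Columns: " + ", ".join(headers),
        "Sample rows:",
    ]
    # Include only a few rows so the prompt stays short.
    for row in rows[:sample_size]:
        lines.append(" | ".join(str(value) for value in row))
    lines.append("Question: " + question)
    return "\n".join(lines)


# Made-up sample data, for illustration only.
prompt = describe_sheet(
    "Sales",
    ["Region", "Units"],
    [["North", 10], ["South", 7]],
    "Which region sold more units?",
)
print(prompt)
```

The resulting text can be pasted into any chat interface or sent through an API, giving the external model the column names and data shape that an embedded tool would see automatically.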